AuTom and Jerry

Introduction

The project is inspired by the “Push-Button Kitty” episode of Tom and Jerry. It is an intelligent mobile robot system that detects and follows targets in an unknown environment without colliding with obstacles.

Methodology

The goal of the project is for an MBot to autonomously identify, follow, and catch bugs in an unknown environment while avoiding obstacles. First, the MBot needs a reliable method to find the bugs in real time and estimate their poses using computer vision. Second, the MBot needs to plan a safe path to the bug, which requires the map and localization provided by SLAM. Third, the MBot needs to follow the bug and close the distance to it using vision tracking and a PID controller. The following subsections discuss the approach to each of these tasks.

Component List

The standard MBot on which the system is built has a Raspberry Pi, a BeagleBone, a lidar, a two-wheeled motor drive, and an on-board PiCam. In addition, two 720p cameras with a 100-degree field of view need to be purchased to replace the PiCam and increase the detection range.

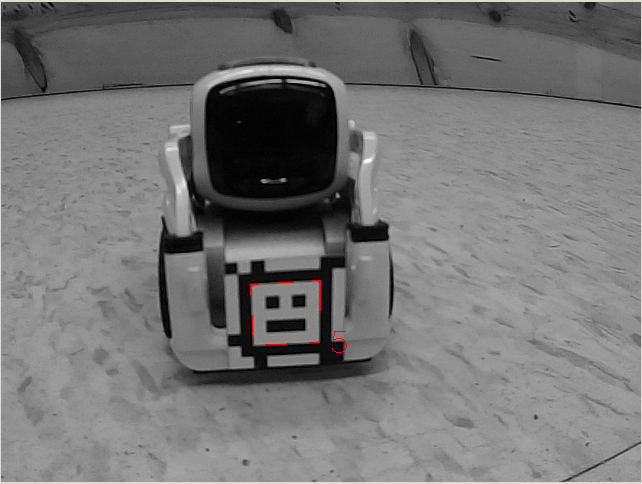

Vision Tracking

AprilTags are attached to the bugs to mark them for real-time tracking. The on-board cameras detect the AprilTags and estimate the poses of the bugs relative to the camera. The MBot then transforms these poses from the camera frame into the SLAM coordinate frame.

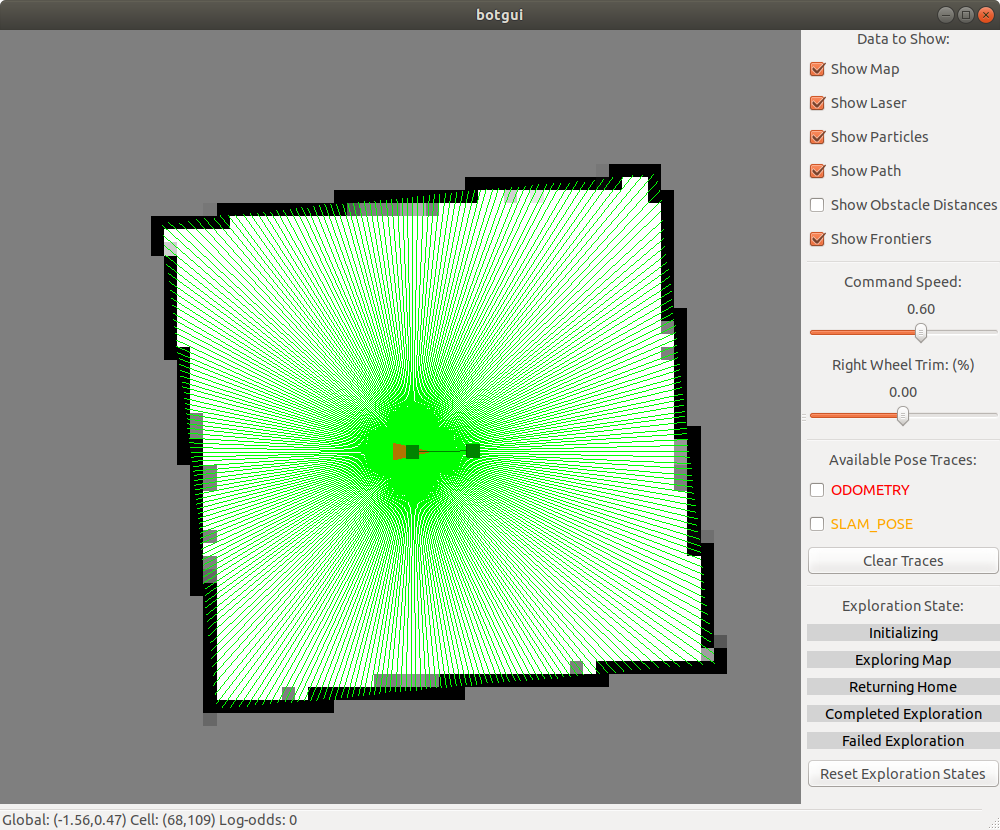
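The camera-to-SLAM frame transformation can be illustrated with planar pose composition. The following is a minimal sketch, not the project's implementation. It assumes the AprilTag detection has already been reduced to a 2D pose in a camera frame whose x-axis points forward, and that the camera mounting offset on the robot is known. The names `Pose2D`, `compose`, and `tag_in_world` are hypothetical.

```python
import math
from typing import NamedTuple


class Pose2D(NamedTuple):
    x: float      # metres
    y: float      # metres
    theta: float  # radians, counter-clockwise


def compose(parent: Pose2D, child: Pose2D) -> Pose2D:
    """Express `child` (given in the frame of `parent`) in parent's own reference frame."""
    c, s = math.cos(parent.theta), math.sin(parent.theta)
    x = parent.x + c * child.x - s * child.y
    y = parent.y + s * child.x + c * child.y
    # Wrap the summed heading into (-pi, pi].
    total = parent.theta + child.theta
    theta = math.atan2(math.sin(total), math.cos(total))
    return Pose2D(x, y, theta)


def tag_in_world(robot_in_world: Pose2D,
                 camera_in_robot: Pose2D,
                 tag_in_camera: Pose2D) -> Pose2D:
    """Chain world <- robot <- camera <- tag to get the tag pose in the SLAM frame."""
    camera_in_world = compose(robot_in_world, camera_in_robot)
    return compose(camera_in_world, tag_in_camera)
```

Here `robot_in_world` would come from the SLAM pose estimate and `tag_in_camera` from the tag detector. A full 3D treatment would use 4x4 homogeneous transforms instead, but the chaining order is the same.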

Motion Planning

When the robot spots a bug by its AprilTag, it generates a goal position to drive to. The route to that position is computed with the A* (A-star) path planning algorithm, which uses the occupancy grid built by SLAM to find the lowest-cost path to the target. The robot then drives along this path under the control of a finely tuned PID (Proportional-Integral-Derivative) controller.
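To make the planning step concrete, here is a minimal A* sketch over a small occupancy grid. It is an illustration under simplifying assumptions, not the planner used on the MBot: cells are either free (`0`) or occupied (`1`), motion is 4-connected with unit step cost, and the heuristic is Manhattan distance. The function name `astar` and the grid layout are hypothetical.

```python
import heapq
import math


def astar(grid, start, goal):
    """Return the shortest 4-connected path from start to goal as a list of
    (row, col) cells, or None if the goal cannot be reached."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected unit-cost grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries are (f = g + h, g, cell)
    best_cost = {start: 0}
    came_from = {}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc]:
                continue  # outside the map or occupied
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, math.inf):
                best_cost[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None
```

On a real SLAM occupancy grid the cells hold occupancy probabilities or log-odds, so a practical planner would typically threshold them and inflate obstacles by the robot radius before searching.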

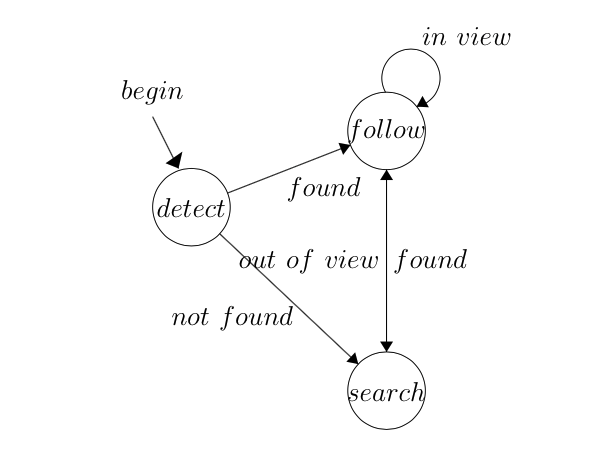
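The PID controller mentioned above can be sketched as follows. This is a generic textbook form with output clamping, shown only to illustrate the idea. The class name `PID`, the gains, and the output limit are placeholder assumptions, not the tuned values used on the robot.

```python
class PID:
    """Discrete PID controller with a symmetric output limit."""

    def __init__(self, kp, ki, kd, output_limit):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first sample

    def update(self, error, dt):
        """Return the control output for the current error and time step dt (seconds)."""
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp so the command stays within what the motors can deliver.
        return max(-self.output_limit, min(self.output_limit, output))
```

One possible use is to feed the remaining distance to the next waypoint as `error` and treat the output as a forward velocity command, with a second controller on the heading error for turning.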

Finite State Machine

Because the camera provides only a limited, local field of view and the planner needs time to compute a path, a robust finite state machine (FSM) is needed to manage the different stages of the process. In the first state, the robot looks for the target in the current camera frame. If the program detects the target, the FSM enters the follow state and the robot keeps following the target until it is reached. If the moving target escapes the field of view, the FSM leaves the follow state, and the robot either turns in the direction in which the target was moving or moves to broaden its view and search for it. When the target is detected again, the FSM re-enters the follow state.
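The transitions described above can be written down as a small transition function. This is a sketch under assumptions: the state names `SEARCH`, `FOLLOW`, `RECOVER`, and `CAUGHT`, the function `next_state`, and the `catch_radius` threshold are all hypothetical and chosen for illustration only.

```python
from enum import Enum, auto


class State(Enum):
    SEARCH = auto()   # looking for the target in the current camera frame
    FOLLOW = auto()   # target visible; drive toward it
    RECOVER = auto()  # target lost; turn toward its last motion or widen the view
    CAUGHT = auto()   # target reached


def next_state(state, target_visible, distance=None, catch_radius=0.15):
    """Return the next state given the current state and the latest observation.

    `distance` is the range to the target in metres, or None when it is not visible.
    """
    if state is State.CAUGHT:
        return State.CAUGHT  # terminal state
    if target_visible:
        if distance is not None and distance <= catch_radius:
            return State.CAUGHT
        return State.FOLLOW
    # Target not visible: recover if it was being followed, otherwise keep searching.
    if state in (State.FOLLOW, State.RECOVER):
        return State.RECOVER
    return State.SEARCH
```

Keeping the transition logic in a pure function like this makes it easy to test separately from the perception and planning code, which matters because those components run at different rates.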