AuTom and Jerry

Autonomous Robot for Target Detection and Pursuit

Overview

AuTom and Jerry is an autonomous robotic system inspired by the classic “Push-Button Kitty” episode of Tom and Jerry. The project demonstrates an intelligent mobile robot capable of detecting, tracking, and approaching moving targets in unknown environments while avoiding obstacles.

Key Capabilities:

- Real-time target detection and tracking

- Autonomous navigation with obstacle avoidance

- Dynamic path planning in unknown environments

- Finite-state behavior management for searching for, following, and reacquiring a lost target

System Architecture

Hardware Components

The system is built on a standard MBot platform with the following components:

Core Components:

- Raspberry Pi (main processing unit)

- BeagleBone (auxiliary processing)

- LiDAR sensor (environmental mapping)

- Two-wheeled differential drive system

- On-board camera system

Enhanced Components:

- Two additional 720p cameras (100° field of view each), replacing the standard PiCam to increase detection range and coverage

Software Framework

The system integrates several key technologies:

Computer Vision:

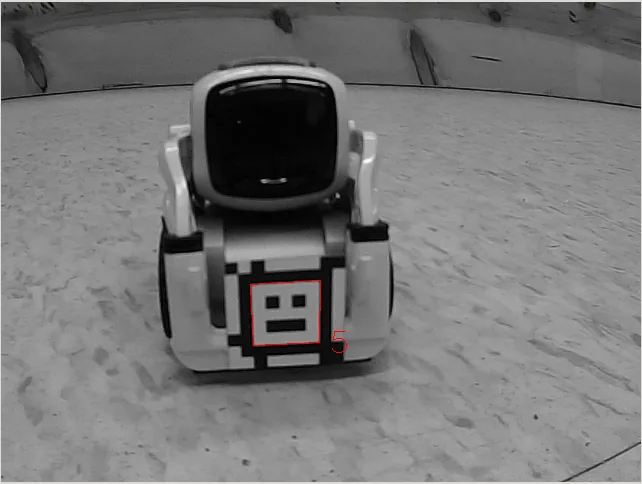

- AprilTag detection for target identification

- Real-time pose estimation

- Multi-camera sensor fusion

Navigation & Planning:

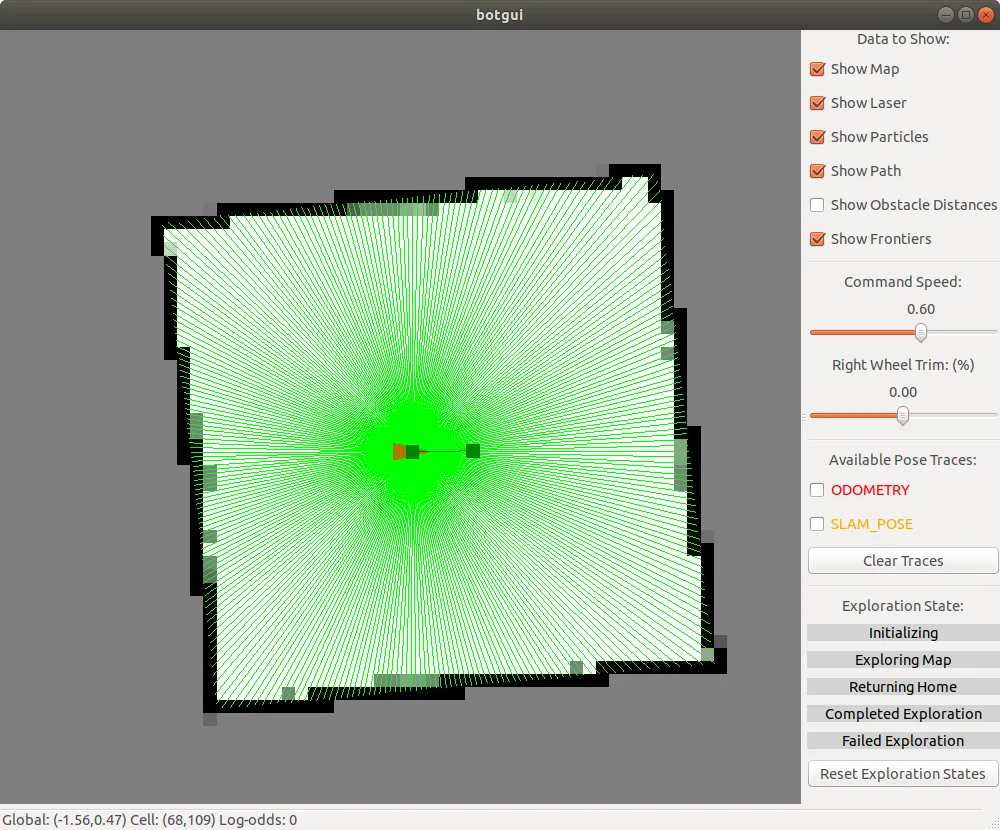

- Simultaneous Localization and Mapping (SLAM)

- A* pathfinding algorithm

- PID control for precise motion

State Management:

- Finite State Machine (FSM) for behavioral control

- Robust handling of target loss and recovery

Technical Implementation

Target Detection and Tracking

The vision system uses AprilTags as fiducial markers for reliable target identification. Combined with the multi-camera setup, it provides:

- Wide-angle coverage: 100° field of view per camera

- Real-time pose calculation: 6-DOF target positioning

- Coordinate transformation: Camera frame to SLAM coordinate frame

- Robust tracking: Handles partial occlusion and varying lighting
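The camera-to-SLAM transformation can be illustrated with a minimal planar sketch. It assumes the OpenCV optical-frame convention (z forward, x right) for the tag translation reported by the AprilTag detector, and it drops height, so the full 6-DOF pose is projected onto the floor plane. Detections of the same tag from several cameras are fused by plain averaging, which is an assumed strategy. `Pose2D`, `compose`, `tag_in_world`, and `fuse` are hypothetical names. The camera mount poses would come from the robot's own calibration.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Pose2D:
    """Planar pose: position (x, y) in metres, heading theta in radians."""
    x: float
    y: float
    theta: float


def compose(frame: Pose2D, local: Pose2D) -> Pose2D:
    """Re-express `local` (given relative to `frame`) in the frame `frame` is given in."""
    c, s = math.cos(frame.theta), math.sin(frame.theta)
    return Pose2D(frame.x + c * local.x - s * local.y,
                  frame.y + s * local.x + c * local.y,
                  frame.theta + local.theta)


def tag_in_world(tag_x_cam: float, tag_z_cam: float,
                 camera_in_robot: Pose2D, robot_in_world: Pose2D) -> Tuple[float, float]:
    """Map a tag's position from a camera's optical frame to the SLAM (world) frame.

    Assumes the OpenCV optical convention (z forward, x right) and ignores height.
    """
    # Optical frame -> camera body frame (x forward, y left).
    tag_in_camera = Pose2D(tag_z_cam, -tag_x_cam, 0.0)
    # Camera body frame -> robot frame -> SLAM world frame.
    tag_in_robot = compose(camera_in_robot, tag_in_camera)
    tag = compose(robot_in_world, tag_in_robot)
    return tag.x, tag.y


def fuse(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Combine per-camera world-frame estimates of one tag by unweighted averaging."""
    n = len(estimates)
    return sum(x for x, _ in estimates) / n, sum(y for _, y in estimates) / n
```

For example, a tag 2 m straight ahead of a forward-facing camera on a robot at (1, 2) with heading π/2 maps to roughly (1, 4) in the SLAM frame.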

Motion Planning and Control

Path Planning:

- Uses the A* algorithm to generate optimal paths

- Integrates SLAM-generated occupancy grid for obstacle awareness

- Considers dynamic target movement in planning decisions
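A* over the SLAM occupancy grid can be sketched as follows. This is a generic textbook version rather than the project's code: it assumes a grid of 0 (free) and 1 (occupied) cells, 8-connected moves with the octile-distance heuristic, and it refuses diagonal moves that would clip the corner of an occupied cell. The function name `astar` and the grid encoding are assumptions.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]
SQRT2 = math.sqrt(2.0)
# (row step, col step, move cost) for the 8-connected neighbourhood.
MOVES = [(-1, 0, 1.0), (1, 0, 1.0), (0, -1, 1.0), (0, 1, 1.0),
         (-1, -1, SQRT2), (-1, 1, SQRT2), (1, -1, SQRT2), (1, 1, SQRT2)]


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 8-connected path on an occupancy grid (0 = free, 1 = occupied)."""
    rows, cols = len(grid), len(grid[0])

    def free(r: int, c: int) -> bool:
        return 0 <= r < rows and 0 <= c < cols and grid[r][c] == 0

    def heuristic(cell: Cell) -> float:
        # Octile distance: admissible and consistent for these move costs.
        dr, dc = abs(cell[0] - goal[0]), abs(cell[1] - goal[1])
        return (dr + dc) + (SQRT2 - 2.0) * min(dr, dc)

    if not (free(*start) and free(*goal)):
        return None
    open_heap = [(heuristic(start), 0.0, start)]
    g_cost: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:  # reconstruct the path by walking parents back to start
            path = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry superseded by a cheaper one
        r, c = cell
        for dr, dc, step in MOVES:
            nr, nc = r + dr, c + dc
            if not free(nr, nc):
                continue
            if dr and dc and not (free(r + dr, c) and free(r, c + dc)):
                continue  # diagonal would clip an occupied corner
            new_g = g + step
            if new_g < g_cost.get((nr, nc), math.inf):
                g_cost[(nr, nc)] = new_g
                parent[(nr, nc)] = cell
                heapq.heappush(open_heap, (new_g + heuristic((nr, nc)), new_g, (nr, nc)))
    return None
```

In a pursuit loop, the goal cell would be the cell containing the target's latest world-frame position, and the search would be rerun as that position changes. The threshold used to turn SLAM cell values into free or occupied cells is likewise an assumption.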

Control System:

- Fine-tuned PID controller for smooth motion execution

- Differential drive control for precise maneuvering

- Real-time velocity and heading adjustments
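The control layer can be sketched as two PID loops, one on heading error and one on distance to the target. Their outputs (linear speed v and angular speed ω) are converted to left and right wheel speeds with standard differential-drive kinematics. The gains, the 0.3 m stop distance, and the 0.16 m wheel base are placeholder values, and `control_step` is a hypothetical helper rather than the project's controller.

```python
import math
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class PID:
    """Textbook PID controller with a symmetric output clamp (no anti-windup)."""
    kp: float
    ki: float
    kd: float
    output_limit: float = math.inf
    _integral: float = field(default=0.0, init=False)
    _prev_error: Optional[float] = field(default=None, init=False)

    def update(self, error: float, dt: float) -> float:
        self._integral += error * dt
        # Skip the derivative term on the first call, when there is no previous error.
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        out = self.kp * error + self.ki * self._integral + self.kd * derivative
        return max(-self.output_limit, min(self.output_limit, out))


def wrap_angle(angle: float) -> float:
    """Wrap an angle in radians to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def wheel_speeds(v: float, omega: float, wheel_base: float) -> Tuple[float, float]:
    """Differential-drive inverse kinematics: body (v, omega) -> (left, right) speeds."""
    half = wheel_base / 2.0
    return v - omega * half, v + omega * half


def control_step(pose: Tuple[float, float, float], target: Tuple[float, float],
                 heading_pid: PID, speed_pid: PID, dt: float,
                 stop_distance: float = 0.3, wheel_base: float = 0.16) -> Tuple[float, float]:
    """One control cycle toward `target`; `pose` is (x, y, theta) in the SLAM frame."""
    x, y, theta = pose
    dx, dy = target[0] - x, target[1] - y
    heading_error = wrap_angle(math.atan2(dy, dx) - theta)
    omega = heading_pid.update(heading_error, dt)
    distance_error = math.hypot(dx, dy) - stop_distance
    # Scale forward speed by cos(heading error) and never drive backwards.
    v = max(0.0, speed_pid.update(distance_error, dt) * math.cos(heading_error))
    return wheel_speeds(v, omega, wheel_base)
```

Scaling v by the cosine of the heading error makes the robot mostly rotate when the target is far off-axis and drive forward once it is roughly aligned.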

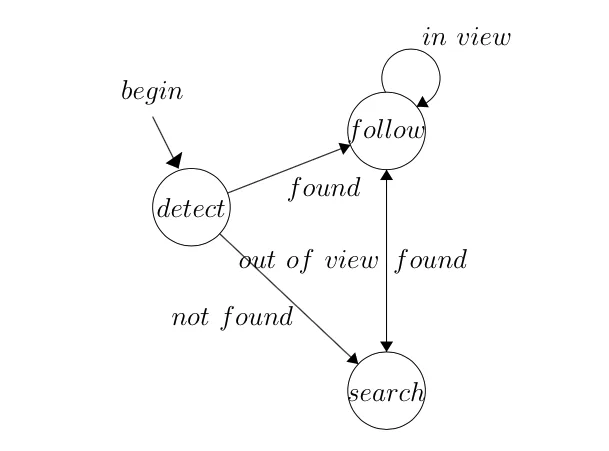

Behavioral State Machine

The FSM manages robot behavior across different operational scenarios:

Primary States:

Search State

- Systematic environment scanning

- Target detection within the cameras’ field of view

- Transition to the Follow state upon target acquisition

Follow State

- Continuous target tracking

- Dynamic path replanning

- Collision avoidance integration

Recovery State

- Activated when the target leaves the field of view

- Predictive turning based on the target’s last known trajectory

- View-expansion maneuvers to reacquire the target
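The predictive turning in the Recovery state can be illustrated by extrapolating the target's bearing from its last two sightings at a constant angular rate, then turning toward the predicted bearing. This is one possible prediction rule, assumed for illustration. The bearings are world-frame angles from the robot to the target, and `predict_bearing` and `turn_direction` are hypothetical names.

```python
import math
from typing import List, Tuple


def wrap_angle(angle: float) -> float:
    """Wrap an angle in radians to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def predict_bearing(history: List[Tuple[float, float]], now: float) -> float:
    """Extrapolate the target's world-frame bearing to time `now`.

    `history` holds (timestamp_s, bearing_rad) sightings, oldest first.
    Assumes a constant angular rate between the last two sightings.
    """
    if len(history) < 2:
        return history[-1][1]  # single sighting: no rate estimate, reuse it
    (t0, b0), (t1, b1) = history[-2], history[-1]
    rate = wrap_angle(b1 - b0) / (t1 - t0)  # wrap so +pi/-pi crossings stay small
    return wrap_angle(b1 + rate * (now - t1))


def turn_direction(robot_heading: float, predicted_bearing: float) -> int:
    """+1: rotate counter-clockwise (left); -1: rotate clockwise (right)."""
    return 1 if wrap_angle(predicted_bearing - robot_heading) >= 0.0 else -1
```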

State Transitions:

- Target detected: Search → Follow

- Target lost: Follow → Recovery

- Target reacquired: Recovery → Follow
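The three transitions above fit in a small lookup table keyed on the current state and whether a tag is visible in any camera during the current cycle. The sketch encodes exactly those transitions and nothing more (it has no timeout that returns Recovery to Search, for example); `State`, `TRANSITIONS`, `next_state`, and `run` are illustrative names.

```python
from enum import Enum, auto
from typing import Iterable, List


class State(Enum):
    SEARCH = auto()
    FOLLOW = auto()
    RECOVERY = auto()


# (current state, target visible this cycle) -> next state.
# Any pair not listed keeps the current state.
TRANSITIONS = {
    (State.SEARCH, True): State.FOLLOW,     # target detected
    (State.FOLLOW, False): State.RECOVERY,  # target lost
    (State.RECOVERY, True): State.FOLLOW,   # target reacquired
}


def next_state(state: State, target_visible: bool) -> State:
    return TRANSITIONS.get((state, target_visible), state)


def run(visibility: Iterable[bool], state: State = State.SEARCH) -> List[State]:
    """Step the FSM once per perception cycle and return the state after each step."""
    trace = []
    for visible in visibility:
        state = next_state(state, visible)
        trace.append(state)
    return trace
```

Keeping the transitions in a table makes it easy to audit that every arrow listed above is present and that no unintended transition exists.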

Results and Performance

The system successfully demonstrates autonomous target pursuit with the following characteristics:

- Reliable detection: Consistent AprilTag recognition across varying conditions

- Smooth navigation: Collision-free movement in cluttered environments

- Adaptive behavior: Robust recovery from target loss scenarios

- Real-time performance: Low-latency response to dynamic target movement

Technical Challenges and Solutions

Challenge 1: Limited Field of View

- Solution: Multi-camera setup with wide-angle lenses
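The benefit of the wide-angle setup can be quantified with simple interval arithmetic. Each camera covers its mounting yaw ±50°, and the usable horizontal coverage is the union of those intervals. The ±45° mounting yaws used below are hypothetical because the actual mounting angles are not stated. The sketch also assumes no camera's field of view crosses the ±180° direction behind the robot.

```python
from typing import List


def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees to [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def cameras_seeing(bearing_deg: float, mount_yaws_deg: List[float],
                   fov_deg: float = 100.0) -> List[int]:
    """Indices of cameras whose horizontal field of view contains the bearing."""
    half = fov_deg / 2.0
    return [i for i, yaw in enumerate(mount_yaws_deg)
            if abs(wrap_deg(bearing_deg - yaw)) <= half]


def coverage_deg(mount_yaws_deg: List[float], fov_deg: float = 100.0) -> float:
    """Total horizontal angle covered by the union of all cameras' fields of view.

    Assumes no field of view straddles the +/-180 degree seam behind the robot.
    """
    half = fov_deg / 2.0
    intervals = sorted((yaw - half, yaw + half) for yaw in mount_yaws_deg)
    total = 0.0
    cur_lo, cur_hi = intervals[0]
    for lo, hi in intervals[1:]:
        if lo <= cur_hi:  # overlapping or touching: extend the current span
            cur_hi = max(cur_hi, hi)
        else:  # gap: close the current span and start a new one
            total += cur_hi - cur_lo
            cur_lo, cur_hi = lo, hi
    return total + (cur_hi - cur_lo)
```

With yaws of -45° and +45°, the two cameras together cover 190° with a 10° overlap straight ahead, so a target directly in front is seen by both.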

Challenge 2: Real-time Path Planning

- Solution: Efficient A* implementation with SLAM integration

Challenge 3: Target Loss Recovery

- Solution: Predictive FSM with intelligent search patterns

Future Enhancements

Potential improvements for the system include:

- Advanced Prediction: Machine learning for target trajectory prediction

- Multi-target Tracking: Simultaneous pursuit of multiple targets

- Enhanced Sensors: Integration of additional sensor modalities

- Collaborative Robotics: Multi-robot coordination capabilities

Demo

This project demonstrates the integration of computer vision, autonomous navigation, and intelligent control systems in a practical robotic application.